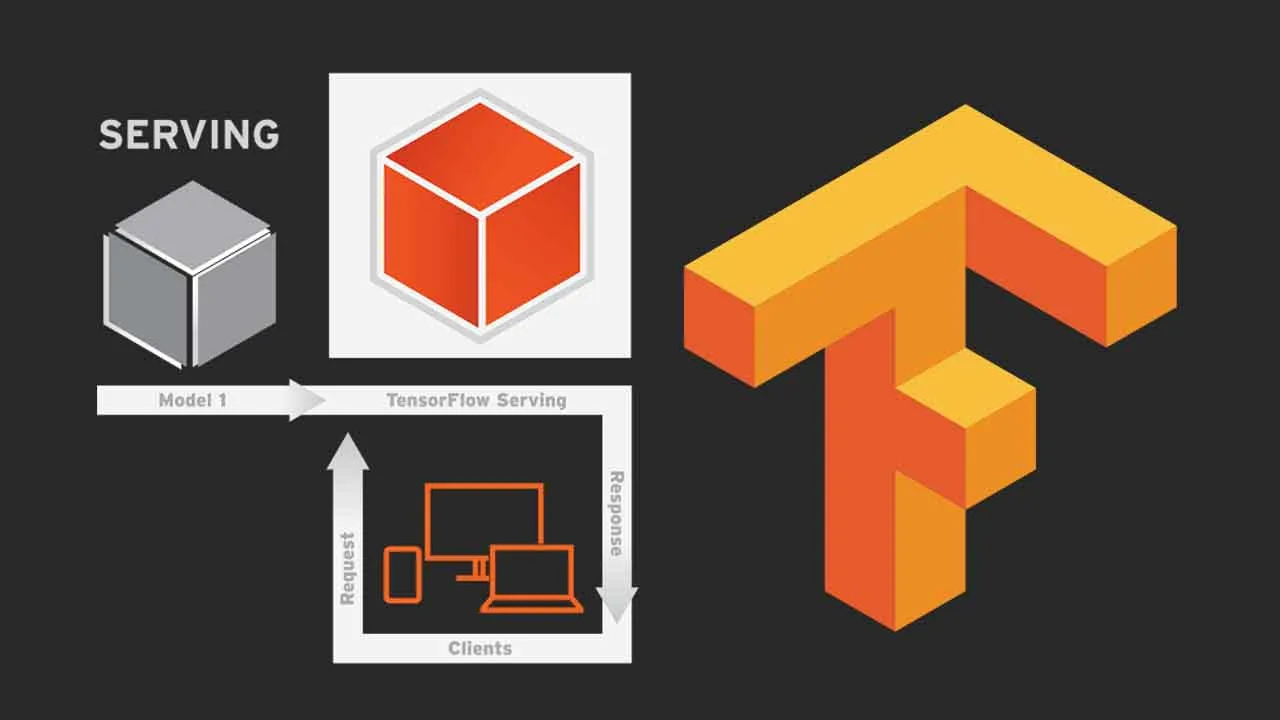

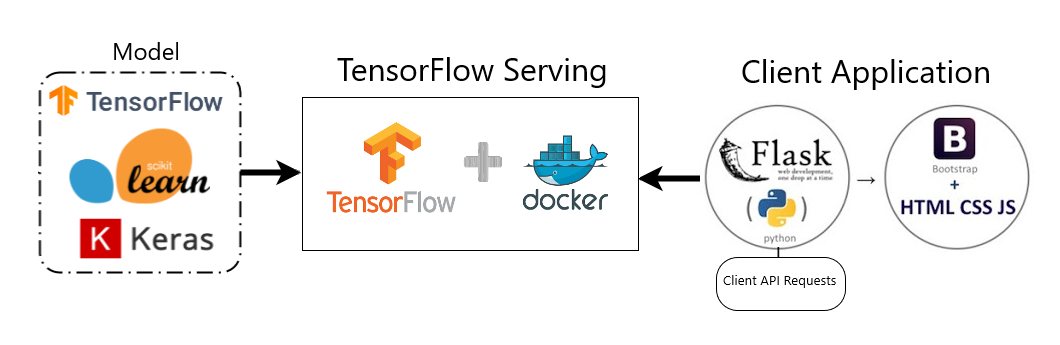

Tensorflow Serving by creating and using Docker images | by Prathamesh Sarang | Becoming Human: Artificial Intelligence Magazine

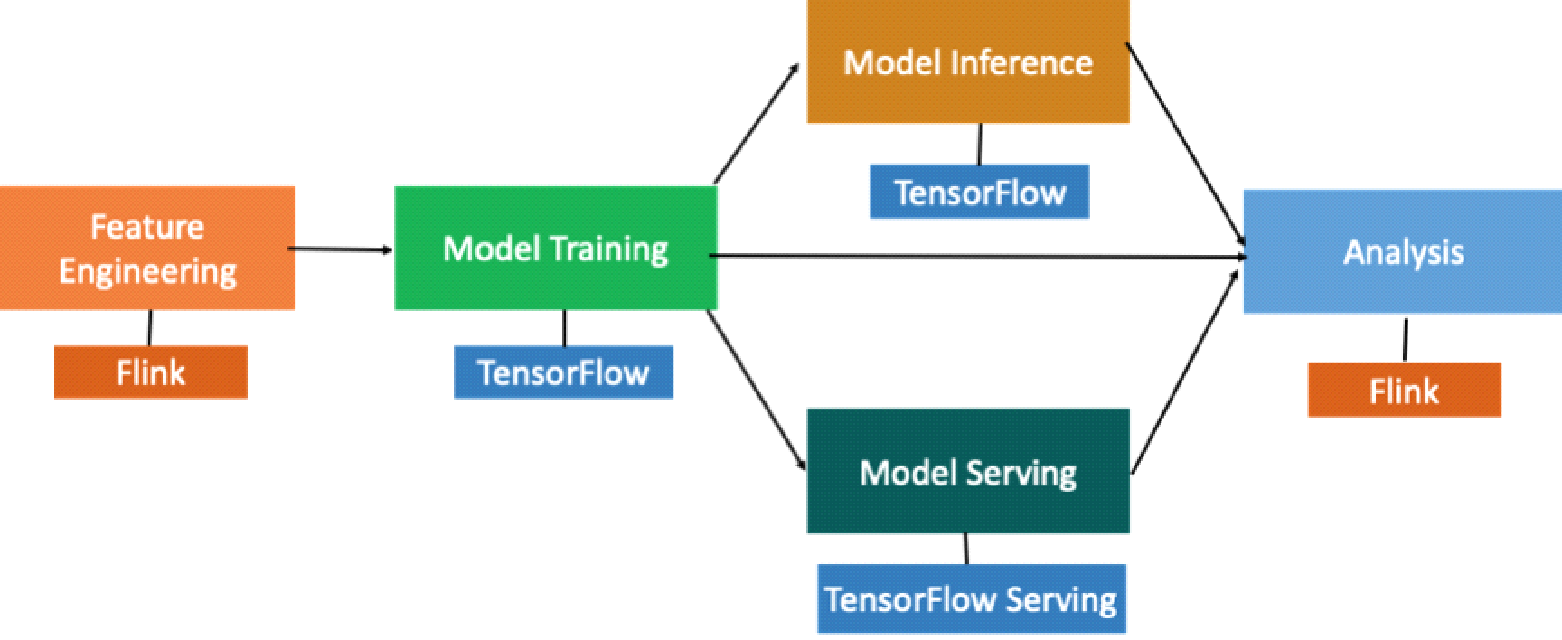

TensorFlow, TensorFlow Serving, and TensorFlow Transform for Linux on IBM Z and LinuxONE - IBM Z and LinuxONE Community

GitHub - tensorflow/serving: A flexible, high-performance serving system for machine learning models

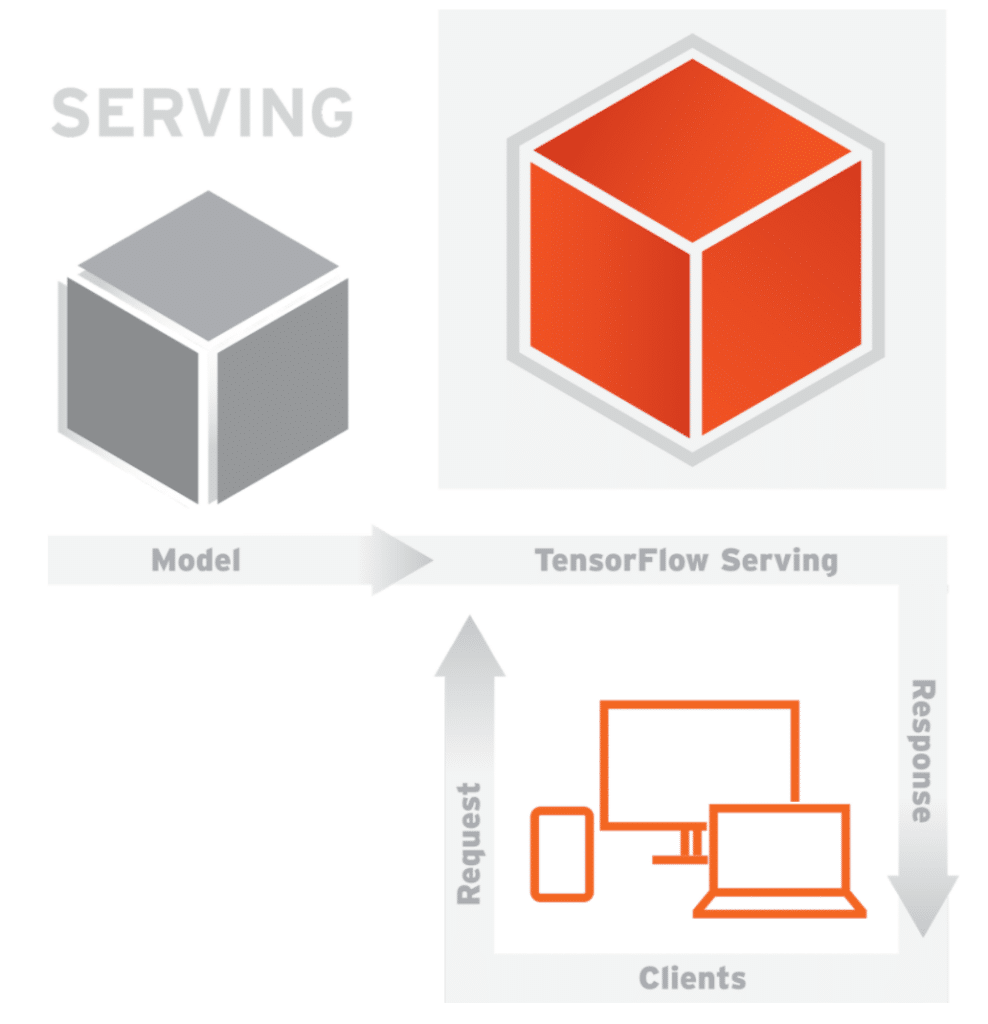

![TensorFlow-Serving: Flexible, High-Performance ML Serving (PDF) | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/cff81b22995937e1bf9533c800b24209932e402a/3-Figure1-1.png)